As I sit at my desk, surrounded by code and SEO reports, I’m amazed by technical SEO’s power. It’s not just about algorithms and rankings. It’s about making digital experiences seamless, connecting businesses with their audience. Every website I’ve optimised feels like a puzzle solved, a bridge between information and seekers.

This journey through SEO has shown me that every successful online presence starts with solid technical SEO. It’s the foundation that makes it all work.

In my years as a WordPress developer and SEO expert, I’ve seen technical SEO’s impact. It’s the hidden hero of digital marketing, working behind the scenes. It ensures search engines can find, understand, and showcase your content well.

From speeding up sites to using structured data, technical SEO unlocks your website’s full potential in search. It’s the key to success.

Let’s explore technical SEO together. We’ll look at its core parts and strategies to boost your website’s search engine performance. This guide will help you understand website optimisation and search engine optimisation, whether you’re experienced or new.

Key Takeaways

- Technical SEO enhances website crawlability and indexability, boosting search rankings

- Optimising site speed can significantly reduce bounce rates and increase conversions

- Implementing HTTPS improves trust and overall site performance

- Structured data can improve click-through rates by up to 40%

- A well-optimised XML sitemap ensures efficient indexing of important pages

- Regular technical audits can prevent critical issues affecting search traffic

Understanding Technical SEO Fundamentals

Technical SEO is key to a website’s success. It makes sure search engines can find and understand your site better. I’ll explain the main parts of technical SEO and how they help your site rank higher in search results.

What Technical SEO Encompasses

Technical SEO covers your website’s structure and how easily search engines can crawl, render, and index it. It’s about making your site easy for search engines to read and understand. This means making your site fast, easy to navigate, and built on clear, consistent URLs.

The Impact on Search Engine Rankings

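Technical SEO directly affects both rankings and user behaviour. Google’s research found that the probability of a bounce rises by 32% as page load time goes from one second to three, and that 53% of mobile visits are abandoned when a page takes longer than three seconds to load. These issues affect how well your site ranks and how users feel about it.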

Core Components of Technical Optimisation

Important parts of technical SEO are:

- Site speed optimisation

- Mobile-friendliness

- Proper URL structure

- XML sitemaps

- HTTPS implementation

Using these can really help. For example, an XML sitemap helps search engines discover new and updated pages sooner. Making your site load faster can also increase sales, by as much as 20%.

By working on these technical SEO basics, you’re building a strong base. This can help your site show up more in search results and get more visitors.

Website Architecture and Structure

A well-planned website architecture is key for SEO success. A good site structure can greatly improve search rankings and user experience. Let’s look at the main parts of a good website architecture.

Creating a Flat Site Structure

A flat site structure is great for SEO. It lets users and search engines reach any page in just a few clicks. This makes it easier for them to find what they need.

URL Hierarchy Best Practices

Optimising URLs is important for a clear site structure. Use a logical format like https://example.com/category/subcategory/keyword-keyword. This helps search engines understand your content’s organisation and relevance.
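The URL pattern above can be generated rather than typed by hand. Here is a minimal sketch, assuming page titles arrive as free text; the helper names `slugify` and `build_url` and the sample category names are my own, for illustration only.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase the text, strip accents, and join words with hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", text)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def build_url(base: str, *segments: str) -> str:
    """Join slugified segments onto the base URL, skipping empty ones."""
    slugs = [slugify(segment) for segment in segments]
    return "/".join([base.rstrip("/")] + [s for s in slugs if s])


# Hypothetical category, subcategory, and page title.
print(build_url("https://example.com", "Running Shoes", "Trail", "Waterproof Trail Shoes"))
# https://example.com/running-shoes/trail/waterproof-trail-shoes
```

Keeping slug generation in one place means every section of the site follows the same category/subcategory/keyword shape.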

Internal Linking Strategy

An effective internal linking strategy is key for site structure. It helps spread authority across your website and guides users to relevant content. Sites with smart internal linking often see better page authority and search engine performance.

| Element | Impact on SEO | User Experience Benefit |
|---|---|---|
| Flat Site Structure | Improved crawlability and indexing | Easier navigation, content discovery |
| Optimised URLs | Better understanding of content hierarchy | Clear indication of page location |
| Internal Linking | Improved authority distribution | Enhanced content discoverability |

By using these strategies, you’ll build a strong site structure. This will help both SEO and user experience. A well-organised website is like a tidy filing cabinet. It makes finding information easy for visitors and search engines.

Technical SEO

Technical SEO is key to a successful optimisation strategy. It makes sure your website is ready for search engine crawlers. Mastering these technical parts can really improve your site’s search ranking.

Let’s look at some important technical SEO parts. Site speed is very important; a 1-second delay can cause a 7% drop in conversions. So, it’s essential to follow SEO best practices for better performance.

Mobile-friendliness is also critical. A responsive design can boost user engagement by up to 20%. This shows how important it is for your site to work well on mobile devices.

Secure connections (HTTPS) are now a must. Google marks HTTP sites as ‘not secure’, which can hurt your rankings. Adding HTTPS is a basic but important technical step.

| Technical SEO Element | Impact |
|---|---|
| Site Speed | 7% conversion loss per 1-second delay |
| Mobile Responsiveness | Up to 20% increase in user engagement |
| HTTPS Implementation | Improved security and user trust |

Technical SEO isn’t a one-off task. It needs constant checking and updates. By keeping up with these technical details, you’ll build a strong base for your SEO strategy. This will help your site rank better in search engines.

Crawling and Indexing Optimisation

Improving crawling and indexing is key to making your website more visible. I’ll show you the basics of this process. We’ll look at search engine crawlers, managing crawl budget, and using XML sitemaps.

Understanding Search Engine Crawlers

Search engine crawlers, like Googlebot, browse the web to discover pages. They follow links to find new and updated content, which is then added to the search engine’s index. With Google’s index having hundreds of billions of pages, it’s important to optimise for these crawlers.

Managing Crawl Budget

The crawl budget is the number of pages a search engine can crawl on your site in a set time. To make the most of this, keep your site simple. Make sure important pages are no more than three clicks from the homepage. This helps search engines find and index your content well.
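The three-click guideline is easy to check once you have a map of your internal links. The sketch below is a breadth-first search over a small, made-up link graph; the page paths and the function names `click_depths` and `too_deep` are assumptions for illustration, and in practice the graph would come from a crawl export.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict[str, list[str]], home: str, max_clicks: int = 3) -> list[str]:
    """List pages that sit more than max_clicks away from the homepage."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/blog/old-post/"],
    "/shop/": ["/shop/shoes/"],
}
print(too_deep(site, "/"))  # ['/blog/old-post/']
```

Pages that never appear in the result of `click_depths` are not reachable by links at all, which is a quick way to spot orphan pages too.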

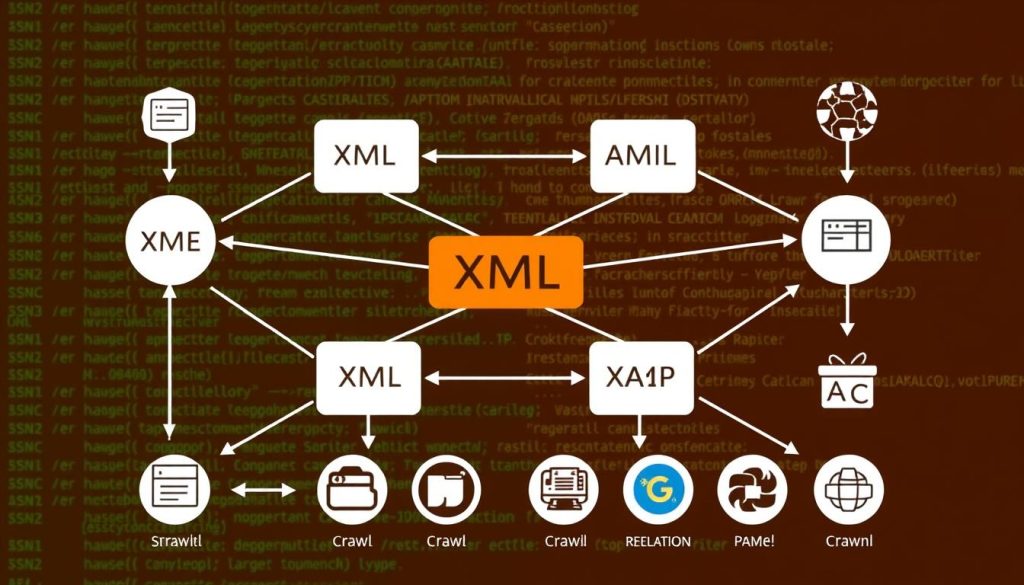

XML Sitemap Implementation

XML sitemaps are guides for search engines, showing all key pages on your site. They’re very useful for big sites or those with complex structures. A well-made XML sitemap can greatly improve how easily your site is crawled and indexed.

| Factor | Impact on Crawling and Indexing |
|---|---|
| Site Structure | Flat structure improves crawlability |
| Page Speed | Faster pages are crawled more efficiently |
| XML Sitemap | Enhances discoverability of pages |

By working on these areas, you can make your website more visible. This ensures search engines can crawl and index your content well. This could lead to better rankings in search results.

Mobile-First Indexing and Responsiveness

In today’s digital world, making your website mobile-friendly is essential. Google now focuses on mobile versions first, which is key for SEO. Let’s dive into what this means for your online presence.

Responsive design is vital. It serves the same HTML on the same URL to every device and adapts the layout with CSS, making it easier for search engines to index. This is now the top choice for mobile optimisation, ensuring a smooth experience for all users.

Mobile-first indexing means Google mainly looks at your mobile content. This change reflects the rise in mobile searches, now over 65% of all searches. If your site isn’t mobile-friendly, you could lose out on visibility and customers.

Mobile optimisation is no longer a luxury; it’s a necessity for businesses aiming to thrive in the digital age.

Here are some key stats on why mobile optimisation is important:

| Metric | Impact |
|---|---|
| User Retention | 61% of users unlikely to return to a problematic mobile site |
| Mobile Traffic Increase | Up to 30% after mobile-friendly upgrades |
| Bounce Rate Impact | Up to 70% increase for slow-loading mobile sites |
| Pre-Purchase Mobile Usage | 90% of consumers use smartphones for research |

These numbers show how vital mobile optimisation is today. By focusing on responsive design and mobile-first indexing, you improve your search rankings and user experience. This can also increase your conversion rates.

Site Speed and Performance

Site speed and performance are key in technical SEO. I’ll look at how fast your website loads and what affects it.

Core Web Vitals Analysis

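Core Web Vitals measure how well your site works for users. They are Largest Contentful Paint (LCP), which measures loading; Interaction to Next Paint (INP), which measures responsiveness and replaced First Input Delay (FID) in March 2024; and Cumulative Layout Shift (CLS), which measures visual stability. Improving these can boost your site’s ranking in search results.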
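To turn raw measurements into something actionable, it helps to bucket them. This is a small sketch that uses the thresholds Google publishes for LCP, INP, and CLS; the function name `rate` and the sample values are my own.

```python
# (good, poor) boundaries per metric. A value at or below the first number is
# "good"; above the second is "poor"; anything in between "needs improvement".
# INP replaced FID as a Core Web Vital in March 2024.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate(metric: str, value: float) -> str:
    """Classify a single field measurement against its thresholds."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
for metric, value in {"LCP": 2.1, "INP": 350, "CLS": 0.3}.items():
    print(metric, rate(metric, value))
```

Google assesses these at the 75th percentile of real user visits, so feed the function percentile values rather than a single lab run where you can.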

Page Load Time Optimisation

Fast page loading is good for users and SEO. Websites that load quickly see more sales. Here’s how to make your site faster:

- Compress and optimise images

- Implement browser caching

- Utilise a Content Delivery Network (CDN)

- Minify CSS, JavaScript, and HTML

Server Response Time

A fast server is essential for site speed. It makes sure content is delivered quickly. Think about upgrading your hosting or changing providers for better performance.

| Metric | Impact on SEO | Optimisation Technique |
|---|---|---|
| Page Load Time | Direct ranking factor | Image compression, caching |
| Core Web Vitals | User experience signal | Optimise LCP, INP, CLS |
| Server Response Time | Affects crawl efficiency | Upgrade hosting, use CDN |

Improving site speed and performance can boost your SEO. It also makes your site better for users, leading to higher rankings and more sales.

HTTPS and Security Measures

Website security is key in today’s digital world. I’ve seen that HTTPS is now vital for user trust and better search rankings. SSL/TLS certificates are central to this: they encrypt data in transit and prove that a website is genuine.

HTTPS brings many benefits:

- It makes your site more visible in search engines.

- It builds trust and keeps users engaged.

- It protects data from being intercepted.

- It meets data protection laws.

| Metric | Impact of HTTPS |
|---|---|
| Organic Traffic Increase | 11% |
| Top-ranking Websites Using HTTPS | 80% |
| User Trust | Significantly Higher |

Adopting HTTPS boosts SEO and guards against cyber threats. Google sees HTTPS as a ranking factor, helping secure sites rank better.

Securing your website with HTTPS is no longer optional; it’s a necessity for maintaining user trust and achieving optimal search engine rankings.

To set up HTTPS well, choose a trusted SSL certificate provider. Make sure to configure it right across your site. This security investment will improve user happiness and search rankings.

Managing Duplicate Content

Duplicate content is a big problem for websites wanting to rank better. About 25-30% of the web has duplicate content. It’s important to deal with this issue well. I’ll look at ways to handle duplicate content, use canonical tags, and merge your website’s content for better SEO.

Canonical Tags Implementation

Canonical tags are great for handling duplicate content. They tell search engines which page to treat as the original. Google recommends canonical tags over blocking duplicate pages in robots.txt, because a blocked page can’t be crawled and so its signals can’t be consolidated. This helps your preferred pages get indexed.
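When auditing, I find it useful to confirm that each page declares exactly one canonical URL. A rough sketch using only Python’s standard library follows; the class and function names are my own, and the HTML is a made-up example.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonicals(html: str) -> list[str]:
    """Return all canonical URLs declared in the given HTML."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical product page that points at its preferred URL.
page = '<html><head><link rel="canonical" href="https://example.com/shoes/"></head><body></body></html>'
print(find_canonicals(page))  # ['https://example.com/shoes/']
```

An empty list means the page has no canonical tag, and more than one entry means conflicting tags; both are worth flagging in an audit.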

URL Parameter Handling

E-commerce sites often have duplicate content because of URL parameters for product variants. For example, different sizes or colours of the same product might have unique URLs. To solve this, you need a good URL parameter handling strategy. This can greatly reduce duplicate pages, saving your crawl budget and improving indexing.
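One way to approach parameter handling is to decide which parameters never change the page’s main content and strip them when computing the canonical URL. The sketch below assumes a hypothetical ignore list (`size`, `colour`, and some common tracking parameters); your own list will depend on how your platform builds its URLs.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that only select a variant or carry tracking data.
IGNORED_PARAMS = {"size", "colour", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Drop ignored parameters and the fragment, and sort what remains."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_url("https://example.com/shoes?colour=red&size=9"))
# https://example.com/shoes
print(canonical_url("https://example.com/shoes?page=2&utm_source=news&colour=blue#reviews"))
# https://example.com/shoes?page=2
```

The result can feed the `href` of a canonical tag, so that every variant URL points back to a single preferred page.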

Content Consolidation Strategies

Consolidating content is essential for managing duplicate content. By reorganising your website and removing unnecessary pages, you can see big improvements. For instance, Canon Europe saw a 37% increase in sessions after cutting 60% of their content. Here are some strategies to consider:

- Use 301 redirects to consolidate duplicate pages

- Apply ‘noindex’ tags to automatically generated pages (e.g., WordPress tag and category pages)

- Regularly audit your site for orphan pages and near-duplicate content

By using these strategies, you can manage duplicate content well. This will improve your site’s SEO, and search engines will correctly rank your preferred content.

| Issue | Impact | Solution |
|---|---|---|
| Duplicate content | Reduced organic traffic, diluted link metrics | Implement canonical tags, consolidate content |
| URL parameters | Excessive duplicate pages | Effective URL parameter handling |
| Orphan pages | Wasted crawl budget | Regular site audits, content pruning |

JavaScript and CSS Optimisation

In the world of technical SEO, JavaScript and CSS optimisation are key. Learning these can really help a website perform better and rank higher in search engines.

JavaScript SEO is quite complex. Google processes JavaScript pages in three phases: crawling, rendering, and indexing. Because rendering is resource-intensive, Googlebot queues pages for rendering and may run their JavaScript later. This can slow down how fast your content gets indexed.

CSS optimisation is also vital for making pages load faster. Minification, which cuts down code size by removing extra characters and spaces, is a simple yet powerful method I use often.

Let’s look at how different rendering methods compare:

| Rendering Method | SEO Impact | Complexity |
|---|---|---|
| Server-Side Rendering (SSR) | Improves SEO performance | More complex to implement |
| Client-Side Rendering (CSR) | Can slow indexing | Simpler implementation |
| Dynamic Rendering | Helps with bot crawling | Not a long-term solution |

Google doesn’t index JavaScript (.js) or CSS (.css) files directly. They’re used for making webpages work. A common error is blocking .js files in robots.txt, which can stop proper crawling and indexing.
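A quick way to catch this mistake is to test your script and stylesheet URLs against your robots.txt rules. The sketch below uses Python’s built-in `urllib.robotparser`; the robots.txt content, the asset URLs, and the function name `blocked_resources` are made up for illustration. Note that the standard-library parser matches plain path prefixes and may not mirror Googlebot’s wildcard handling, so treat the result as a first check rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that accidentally blocks the JavaScript folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /assets/js/
"""


def blocked_resources(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given user agent is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


assets = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]
print(blocked_resources(ROBOTS_TXT, assets))
# ['https://example.com/assets/js/app.js']
```

Any script or stylesheet that shows up in the output is one a crawler following these rules could not load when rendering the page.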

Regular checks with tools like Semrush’s Site Audit can spot JavaScript SEO problems. By using these optimisation methods, you’ll make websites that are both dynamic and SEO-friendly.

International SEO Configuration

In today’s global marketplace, international SEO is key for businesses looking to grow online. It means making websites work for different countries and languages. This ensures the right content gets to the right people.

Hreflang Implementation

Hreflang tags are a big deal in international SEO. They tell search engines which language and region a webpage is for. For example, a UK company targeting French speakers would use:

<link rel="alternate" href="http://example.com/fr" hreflang="fr-fr"/>

Using hreflang tags right can increase relevant traffic by 20%. This boosts your site’s performance in international markets.
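Hreflang annotations need to be reciprocal: each language version should list every alternate, including itself. Generating the tags from a single mapping keeps them consistent. Here is a minimal sketch; the locale codes, URLs, and the function name `hreflang_tags` are assumptions for illustration.

```python
from html import escape
from typing import Optional


def hreflang_tags(alternates: dict[str, str], default: Optional[str] = None) -> list[str]:
    """Build one <link rel="alternate"> tag per locale, plus an optional x-default."""
    tags = [
        f'<link rel="alternate" href="{escape(url)}" hreflang="{escape(code)}"/>'
        for code, url in sorted(alternates.items())
    ]
    if default:
        tags.append(f'<link rel="alternate" href="{escape(default)}" hreflang="x-default"/>')
    return tags


# Hypothetical site with a UK English and a French version.
versions = {
    "en-gb": "https://example.com/",
    "fr-fr": "https://example.com/fr",
}
for tag in hreflang_tags(versions, default="https://example.com/"):
    print(tag)
```

The same list of tags goes into the `<head>` of every version of the page, which is what makes the annotations reciprocal.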

Geotargeting Setup

Geotargeting helps you reach specific areas. You can do this with different URL structures:

- Country-code Top-Level Domains (ccTLDs): e.g., example.co.uk

- Subdomains: e.g., uk.example.com

- Subdirectories: e.g., example.com/uk/

60% of companies find better local search rankings with ccTLDs. It’s a top choice for international SEO.

Multiple Language Management

Managing multiple language versions is vital for international success. 53% of consumers are more likely to buy from brands in their language. Google suggests having one language per page to avoid confusion and boost SEO.

By following these international SEO tips, you can reach global audiences. Remember, 75% of internet users prefer websites in their own language. Localisation is a key part of your international SEO strategy.

Structured Data and Schema Markup

Structured data is a big deal for search engine optimisation. It helps search engines understand our content better. This leads to more visibility and engagement in search results.

Structured data has a big impact. For example, Rotten Tomatoes saw a 25% higher click-through rate with schema markup. The Food Network also saw a 35% increase in visits after using structured data on 80% of their pages.

Rich snippets, powered by schema markup, can make our search listings stand out. Nestlé found that pages with rich results in search have an 82% higher click-through rate. This shows how powerful structured data is for our online presence.

When we use structured data, the right format is key. Google likes JSON-LD because it’s easy to use and maintain. We need to include all required properties for our pages to show up better on Google Search.
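As an illustration of the JSON-LD format, here is a small sketch that builds an `Article` snippet ready to place in a page’s `<head>`. The property set shown is a reasonable starting point rather than a complete list, so check the required properties for your content type; the function name and the sample values are hypothetical.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a <script> block containing schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    # Escape "</" so a value can never close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical article details.
print(article_json_ld(
    "Technical SEO Basics",
    "Jane Doe",
    "2024-01-15",
    "https://example.com/technical-seo/",
))
```

Because the markup lives in one self-contained block, it can be generated from your CMS data without touching the visible HTML, which is a large part of why JSON-LD is easy to maintain.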

| Company | Implementation | Result |
|---|---|---|
| Rotten Tomatoes | 100,000 pages with structured data | 25% higher click-through rate |
| Food Network | 80% of pages with search features | 35% increase in visits |
| Nestlé | Pages with rich results | 82% higher click-through rate |

By using structured data well, we’re not just seen more. We’re also giving search engines clear context about our content. This could lead to better rankings and more user engagement.

XML Sitemaps and Robots.txt

XML sitemaps and robots.txt files are key in technical SEO. They help search engines find and index your site better. This makes your site more visible in search results.

Creating Dynamic Sitemaps

XML sitemaps act as a guide for search engines to your site’s key pages. It’s wise to create dynamic sitemaps that update automatically. This keeps search engines informed about your latest content.

For the best crawl optimisation, keep your sitemap under 50,000 URLs and 50MB when uncompressed. Place it at your domain root (e.g., https://www.website.com/sitemap.xml) for easy discovery.
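A dynamic sitemap is usually just a script that runs whenever content changes. The sketch below builds one with Python’s standard library and enforces the 50,000-URL limit; the page list, the dates, and the function name `build_sitemap` are assumptions, and in a real site the list would come from your CMS or database. It requires Python 3.8 or later for the `xml_declaration` argument.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit from the sitemap protocol


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build sitemap XML from (absolute URL, last-modified YYYY-MM-DD) pairs."""
    if len(pages) > MAX_URLS:
        raise ValueError("Too many URLs for one sitemap; split it and use a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages and modification dates.
pages = [
    ("https://www.website.com/", "2024-01-15"),
    ("https://www.website.com/blog/technical-seo/", "2024-01-10"),
]
print(build_sitemap(pages).decode("utf-8"))
```

Writing the returned bytes to `sitemap.xml` at the domain root on each publish keeps the file in step with your content.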

Robots.txt Configuration

The robots.txt file is the first stop for search engine crawlers. It tells them which parts of your site to crawl and which to ignore. Proper configuration is key for effective crawl optimisation.

A well-structured robots.txt file can significantly improve your site’s crawl efficiency and search engine performance.

Crawl Directives

Crawl directives in your robots.txt file and XML sitemaps guide search engines on how to crawl your site. Remember, robots.txt controls crawling, not indexing, and crawlers treat it as a request rather than an enforced rule. To keep a page out of search results, use the ‘noindex,nofollow’ meta tag on that page, and leave the page crawlable so search engines can see the tag.

By mastering XML sitemaps, robots.txt configuration, and crawl directives, you’ll enhance your site’s discoverability. This improves its chances of ranking well in search results.

404 Error Management and Redirects

Managing 404 errors and setting up a good redirect strategy are key in technical SEO. They greatly affect how users feel and how well your site ranks. I’ll show you how to find and fix broken links, keeping your site’s SEO strong.

404 errors happen when people or search engines try to reach pages that don’t exist. While a few errors are okay, too many can hurt your site. Users have little patience: studies say 50% of them leave if a page takes over 3 seconds to load, and a dead end or a slow chain of redirects tests that patience further. So, managing redirects well is very important.

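To solve this, a detailed redirect plan is needed. 301 (permanent) redirects are best for SEO when a page has moved for good, keeping about 90-99% of link value. On the other hand, 302 redirects mark a move as temporary, so they should not be relied on to pass link value, which can harm your SEO if they are used for a permanent change.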
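Part of a redirect plan is making sure old URLs point straight at their final destination rather than hopping through several redirects. Here is a minimal sketch that flattens a redirect map and catches loops; the paths and the function names `resolve` and `flatten` are made up for illustration.

```python
def resolve(redirects: dict[str, str], url: str, max_hops: int = 10) -> tuple[str, int]:
    """Follow redirects from url and return (final destination, number of hops)."""
    hops = 0
    seen = {url}
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"Redirect loop or overly long chain at {url}")
        seen.add(url)
    return url, hops


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Rewrite every rule so it points directly at its final destination."""
    return {source: resolve(redirects, source)[0] for source in redirects}


# Hypothetical redirect rules: "/old" currently takes two hops to reach "/new".
rules = {"/old": "/interim", "/interim": "/new", "/sale-2022": "/sale"}
print(resolve(rules, "/old"))  # ('/new', 2)
print(flatten(rules))
# {'/old': '/new', '/interim': '/new', '/sale-2022': '/sale'}
```

The flattened map can then be turned into 301 rules for your server, so each old URL reaches its new home in a single hop.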

Link reclamation is also key in handling 404 errors. By finding and fixing broken links, you can get back lost link value. This makes your site better for users and keeps your SEO strong.

| Redirect Type | Link Equity Passed | SEO Impact |
|---|---|---|
| 301 Redirect | 90-99% | Highly Positive |
| 302 Redirect | 0% | Potentially Negative |
| Meta Refresh | Varies | Negative (40% slower load times) |

Good error handling can cut 404 errors by about 70% in just a month. This can make your site more appealing, leading to more clicks and less people leaving quickly. This boost can help your site rank better in search results.

Technical SEO Audit Process

A technical SEO audit is key to keeping your website visible in search engines. Regular audits can find hidden problems that stop your site from ranking well. They also help you know which fixes to do first for the best results.

Essential Audit Tools

To do a full SEO audit, I use several top SEO tools. Screaming Frog is great for crawling up to 500 URLs for free. Google Search Console shows crawl errors and Core Web Vitals scores.

Issue Prioritisation

After the technical analysis, it’s important to sort issues by how big they are. I tackle the biggest problems first. These include:

- Indexation errors

- Robots.txt issues

- XML sitemap problems

- Page speed optimisation

Implementation Timeline

Having a plan for when to fix each issue helps a lot. Here’s a plan I follow:

| Week | Task | Priority |
|---|---|---|
| 1 | Fix robots.txt errors | High |
| 2 | Optimise XML sitemap | High |
| 3-4 | Improve page speed | Medium |
| 5-6 | Resolve mobile-friendly issues | Medium |

By sticking to this plan, I keep my website’s technical SEO in top shape. This helps it rank better in search engines and gives users a better experience.

Conclusion

I’ve seen how technical SEO can change a website. The world of search engine optimisation keeps changing. To stay ahead, you need a complete SEO plan.

Technical SEO is key. A 1-second delay can cut conversions by 7%. But, good technical SEO can boost organic traffic by up to 40%. These numbers show how important website speed and crawlability are.

Using the tips from this guide can improve your website’s visibility and user experience. More than 60% of websites face technical issues. Regular checks, mobile optimisation, and structured data can make a big difference. A strong technical SEO base is essential for lasting success in the digital world.